Davidson College’s AI Innovation Initiative released new guidance on artificial intelligence use this fall. In emails to faculty and staff, the Initiative recommended that community members who choose to use AI rely on the Gemini and Amplify large language models (LLMs) rather than other models. It also introduced professors to Process Feedback, a Google Docs extension designed to monitor and analyze student writing.

The Initiative’s guidance comes amidst nationwide uncertainty about how AI will impact higher education. While some educators favor AI integration in the name of professional preparation, others have issued stark warnings that rushing to embrace AI will be catastrophic for academia.

Associate Dean for Data and Computing Laurie Heyer helps run the AI Innovation Initiative and played a key role in developing College recommendations on AI use. This fall, Heyer is working closely with Director of the College Writing Program Katie Horowitz to administer a pilot program for Process Feedback, a Google Docs extension that generates writing reports including analytics about active typing time, details about copy-pasted text and a playback of the typing process.

The writing department was looking for a tool that would allow professors to “focus on process,” according to Horowitz. The extension does just that. “To me, really, Process Feedback is a tool for helping students to better understand their writing process, and that’s the emphasis that I put on it,” Horowitz said.

Process Feedback also has implications for deterring AI use: it could give professors an additional layer of confidence that take-home exams are written by students, not ChatGPT. The extension allows users to see when text is added and removed, determine whether copy-pasted text came from outside the document and watch the writing process, word by word, from start to finish.

Associate Professor of Political Science Melody Crowder-Meyer intends to use Process Feedback to “make the friction of cheating higher,” comparing monitored take-home essays to tests taken at the exam center. “My goal is to make my classes a place where the structure encourages people to do the hard work and force our brains to develop and not just take shortcuts.”

About 30 faculty members attended Process Feedback trainings led by Heyer and Horowitz. Five sections of Writing 101 are participating in the pilot program this year, according to Horowitz, and a growing number of professors in other departments have also expressed interest. “Pretty much any discipline where writing is involved […] is potentially interested in seeing how the writing happens, and helping their students see how their writing happens,” Heyer said.

Process Feedback may be particularly useful for professors like Daniel Layman. Layman, who chairs the philosophy, politics and economics (PPE) department, stopped assigning take-home essay exams in 2023 because he was concerned about cheating. He switched to in-class blue-book exams and, last spring, administered in-class typed exams monitored by a lockdown browser. But he said take-home exams are a uniquely valuable way to assess learning.

“[Take-home exams are] clearly better in pretty much every pedagogical respect, with respect to developing clarity skills, developing argumentative skills, like editing, revising, slow, careful thought,” Layman said.

This semester, Layman is returning to take-home exams. For now, he is relying on “self-comprehension quizzes” to deter cheating. For professors who are hesitant to do the same, Process Feedback could provide reassurance that a student is behind the essay they submit—not an LLM or website.

As part of the pilot process, the AI Innovation Initiative will collect data on Process Feedback throughout the semester to understand how professors are using the tool. Some students have expressed concerns about how data collected by Process Feedback will be used.

But Heyer and Horowitz said they are confident in the tool’s data privacy protections: no data is collected by the company and everything remains local on the user’s device.

“We have disabled the function that would allow reports to be shared with Process Feedback, the company,” Heyer said. “Your data remains local, so it’s only visible to the people who you share your assignment with.”

Horowitz acknowledged that implementing Process Feedback will not be a one-size-fits-all process and may require students to communicate with professors about how they write best. “We absolutely should make allowances for students to work in ways that are most comfortable for them and that are most productive for them.”

Ultimately, Heyer said she is most excited about how Process Feedback can help students, whether by ensuring they write the work they submit or by shifting the emphasis from what they write to how they write it.

“It’s giving us an opportunity to emphasize what has always been the most important thing about education, and that is the process,” Heyer said.

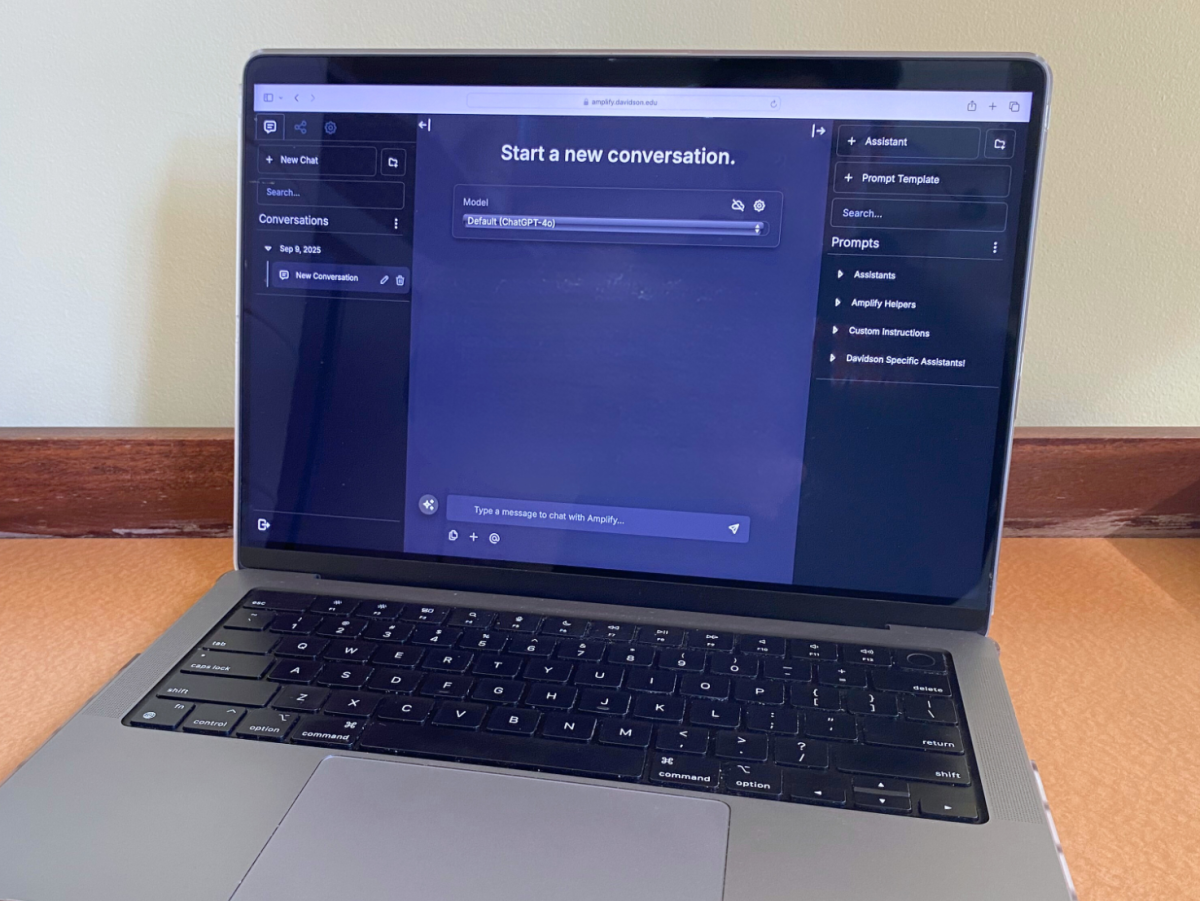

In addition to the Process Feedback pilot, the AI Innovation Initiative recently created a new webpage on artificial intelligence that offers guidance on integrating generative AI in the classroom, including potential uses and key concerns. The page also covers Amplify and Gemini, the College’s preferred LLMs for community use.

Developed by Vanderbilt University for use in higher education, Amplify allows students to send queries to various ChatGPT and Claude LLMs and includes a variety of custom features, according to the Davidson Technology and Innovation (T&I) website. Gemini, which is included in Davidson’s Google Workspace at no additional cost to the College, is subject to “the same data privacy protections already in place for tools like Google Drive and Google Docs,” according to the T&I website.

AI advocates, along with those who argue that ignoring or banning AI would be counterproductive, appreciate the College’s guidance, and so do some skeptics. Professor of English Ann Fox, who wrote in an email that “AI should be handled with care, if at all,” noted that recommending students use Amplify or Gemini over other LLMs does not mean she encourages them to use AI in the first place.

“I don’t encourage the use of AI,” Fox wrote. “I recommend Gemini and Amplify because those are the LLMs the College asks us to use […] I appreciate the guidance the College is currently providing in that regard, and the guidance we are being given to protect student data and our own materials.”